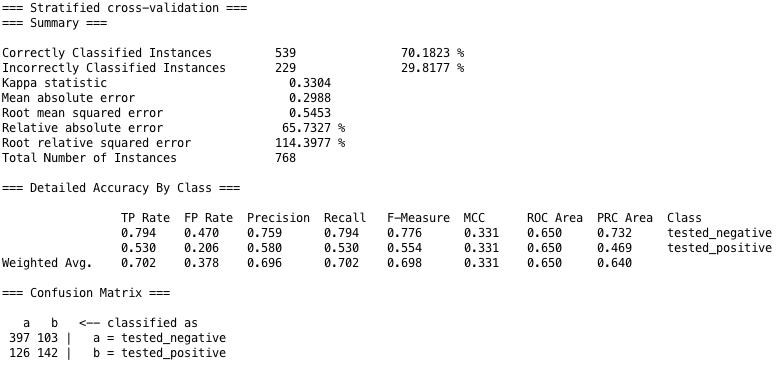

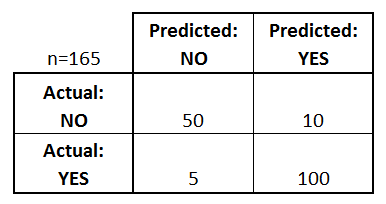

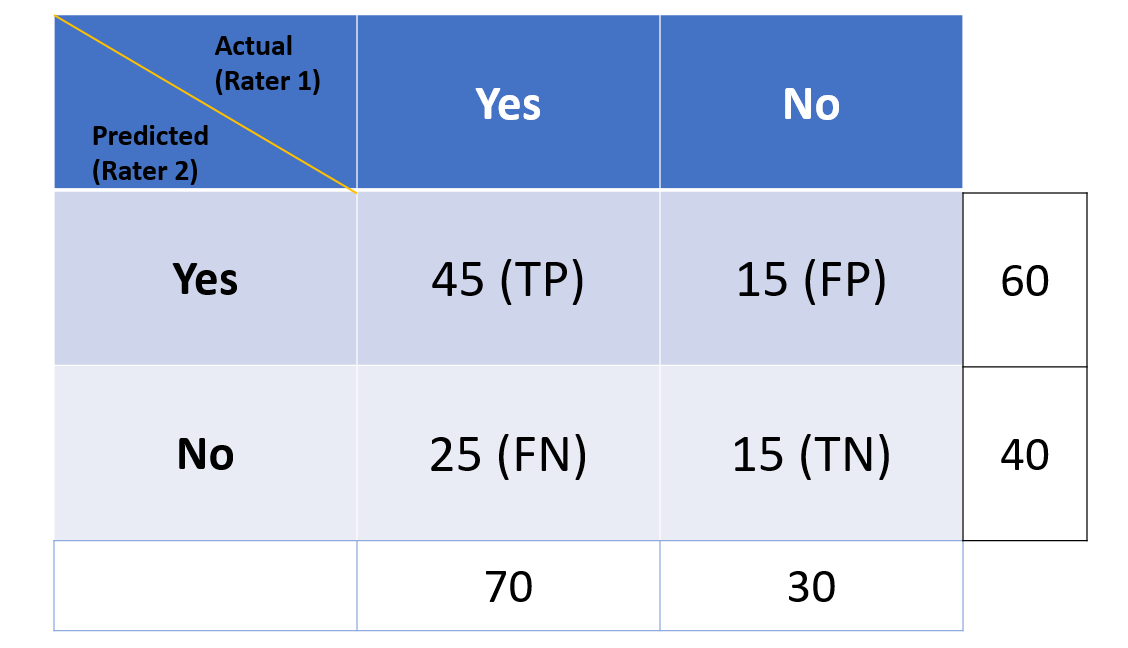

Matthews Correlation Coefficient Is the Best Classification Metric You've Never Heard Of, by Boaz Shmueli (Towards Data Science)

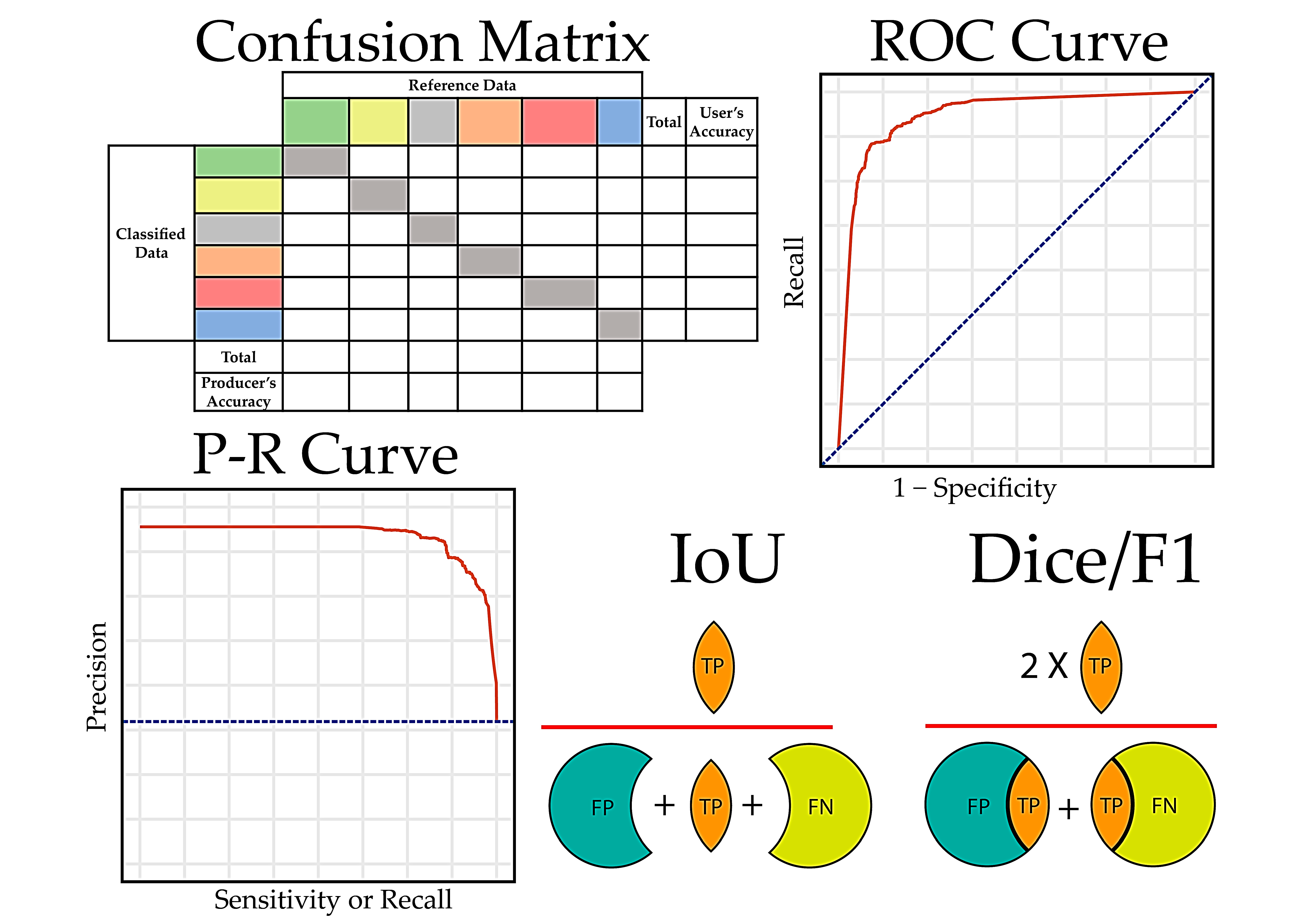

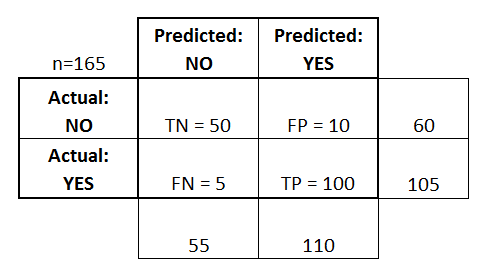

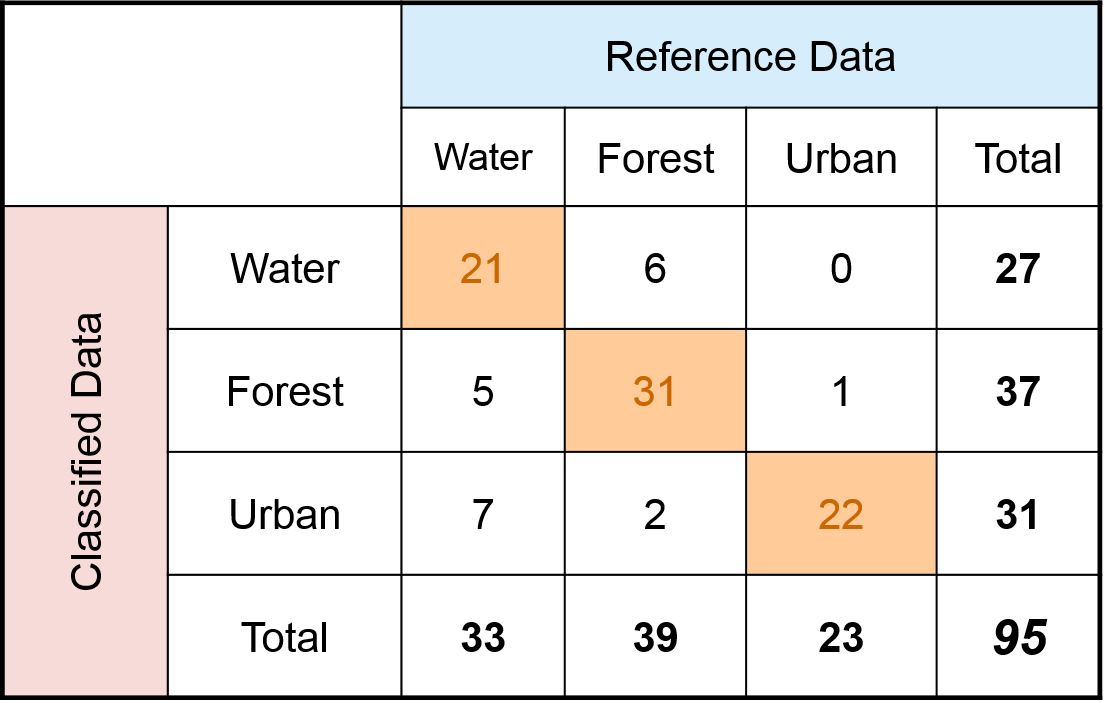

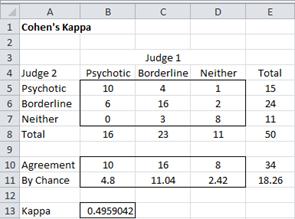

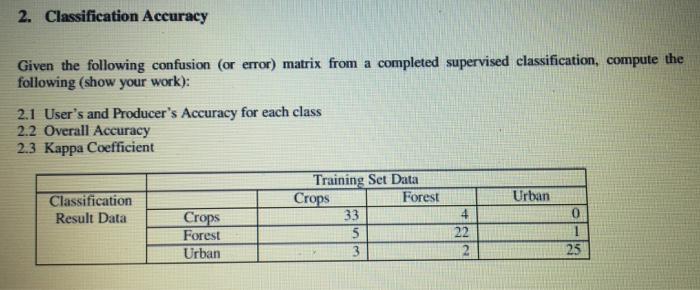

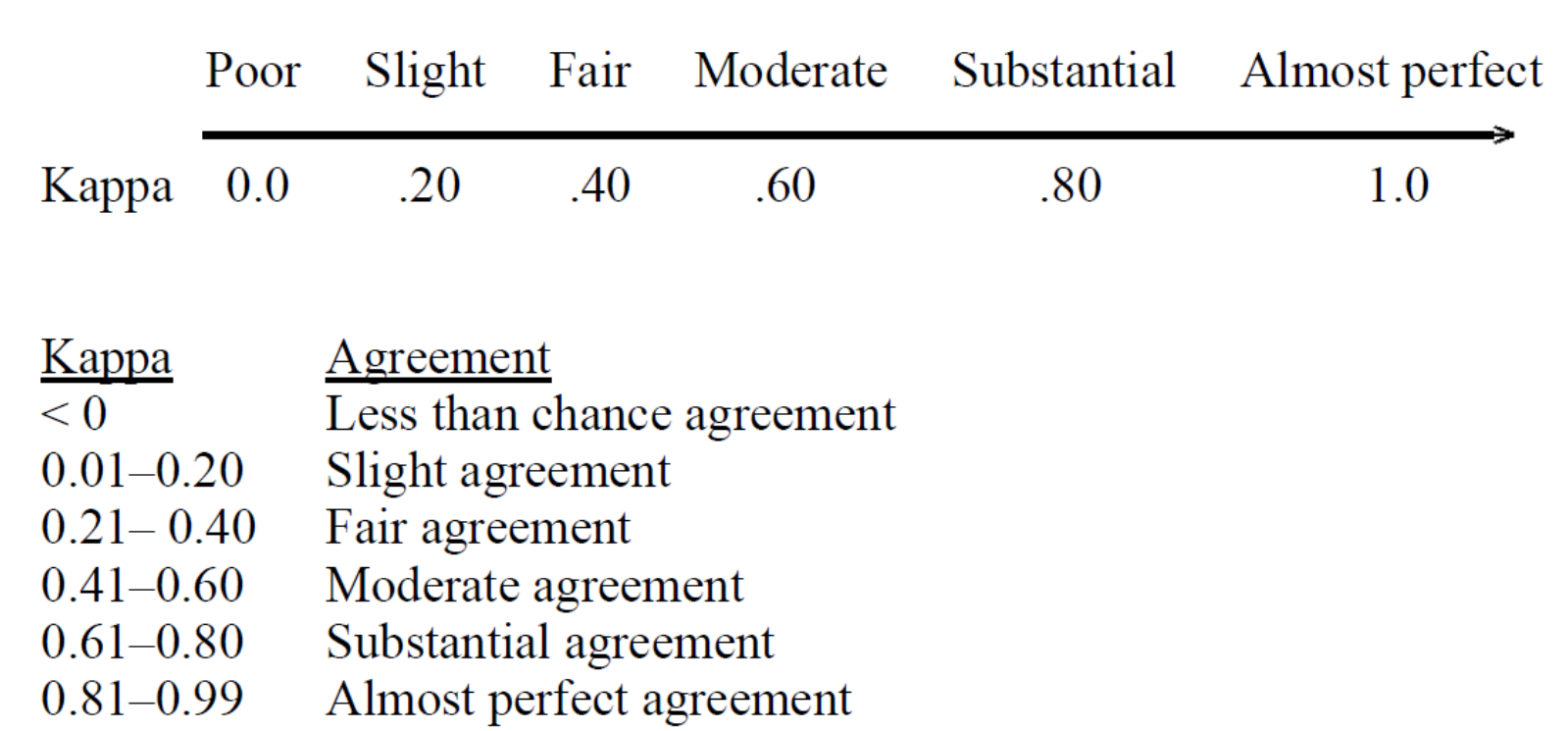

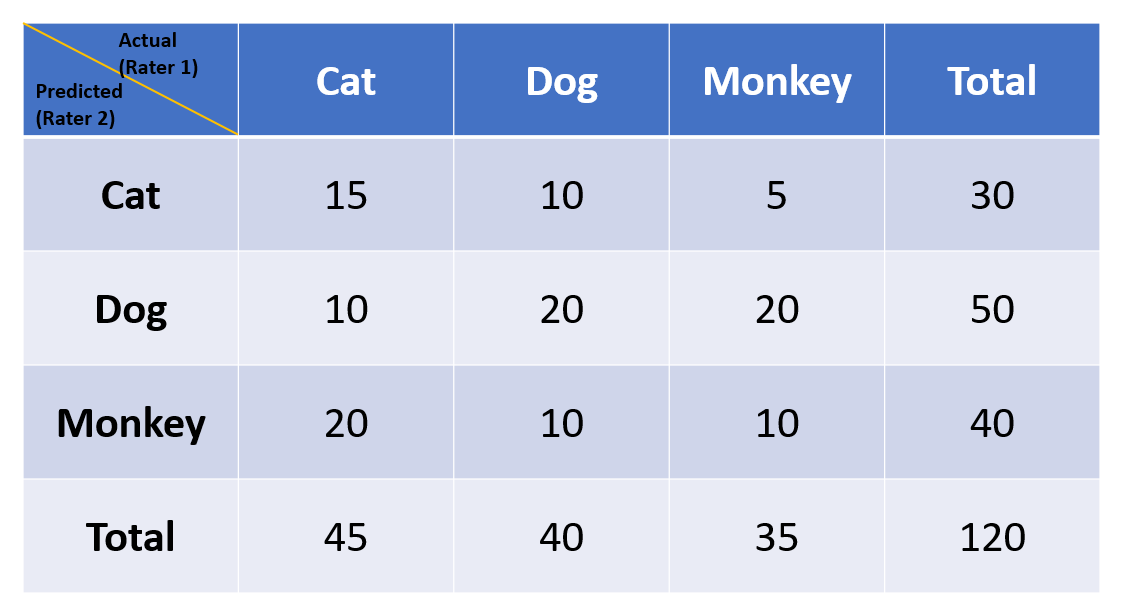

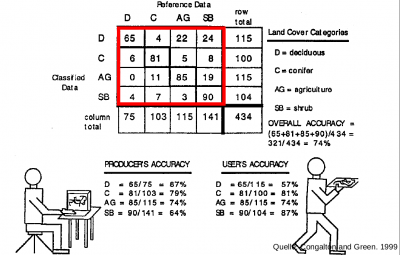

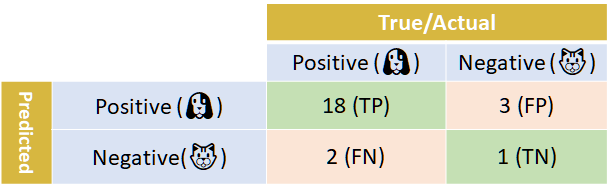

Metrics to evaluate classification models with R code: Confusion Matrix, Sensitivity, Specificity, Cohen's Kappa Value, McNemar's Test (Data Science Vidhya)